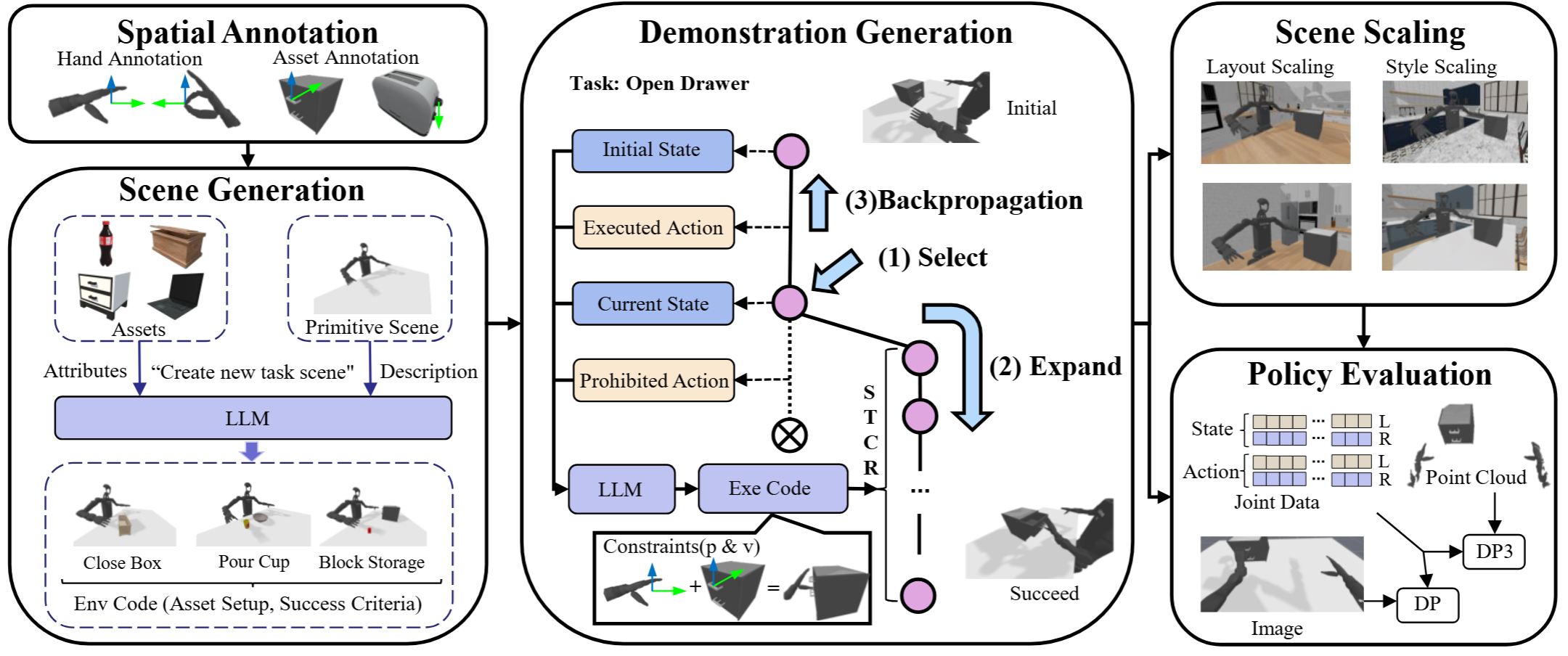

Existing robotics datasets and simulation benchmarks for manipulation predominantly cater to robot-arm platforms. For humanoid robots equipped with dual arms and dexterous hands, however, simulation tasks and high-quality demonstrations are notably lacking. Bimanual dexterous manipulation is inherently more complex because it requires coordinated arm movements and hand operations, which makes autonomous data collection challenging. This paper presents HumanoidGen, an automated task creation and demonstration collection framework that leverages atomic dexterous operations and LLM reasoning to generate relational constraints. Specifically, we provide spatial annotations for both assets and dexterous hands based on the atomic operations, and employ an LLM planner to generate a chain of actionable spatial constraints for arm movements based on object affordances and scenes. To further improve planning ability, we employ a variant of Monte Carlo tree search (MCTS) to enhance LLM reasoning for long-horizon tasks and for tasks with insufficient annotations. In experiments, we create a novel benchmark with augmented scenarios to evaluate the quality of the collected data. The results show that the performance of 2D and 3D diffusion policies scales with the size of the generated dataset.

HumanoidGen is an automated simulation framework for scene generation, demonstration collection, and data generalization in bimanual dexterous manipulation. It aims to provide high-quality demonstrations over diverse scenarios to facilitate data scaling and policy learning. (i) As preparation, the assets and dexterous hands are annotated with spatial information. During scene generation, the LLM planner generates an environment setup as a code-form configuration based on asset, scene, and task descriptions. (ii) Based on the generated scenes and pre-defined hand atomic operations, the LLM then generates planning code that expresses a chain of spatial constraints for subsequent data collection. (iii) For long-horizon tasks and tasks with insufficient annotations, we employ MCTS with introspective exploration to enhance the reasoning performance of the LLM. (iv) Finally, we collect demonstrations by executing the generated code plan, with scene scaling to enhance data diversity. These demonstrations are used to construct a humanoid manipulation benchmark for policy evaluation.
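To make the idea of a "chain of spatial constraints" in step (ii) more concrete, the following is a minimal sketch of one way such a chain could be represented and checked. It is not HumanoidGen's actual planning code: the class names `Constraint` and `Step`, the constraint kinds `point_coincide` and `axis_parallel`, the operation names `pinch` and `grasp`, and all coordinates are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Constraint:
    """One spatial relation between a hand annotation and an asset annotation.

    All names here are hypothetical; values are assumed to be in the world frame.
    """
    kind: str          # "point_coincide" or "axis_parallel"
    hand_value: Vec3   # annotated point or axis on the hand
    asset_value: Vec3  # annotated point or axis on the asset
    tol: float = 1e-2  # acceptance tolerance on the residual

    def residual(self) -> float:
        if self.kind == "point_coincide":
            # Euclidean distance between the two annotated points.
            return math.dist(self.hand_value, self.asset_value)
        if self.kind == "axis_parallel":
            # 0 when the axes are parallel or anti-parallel, 1 when orthogonal.
            dot = sum(h * a for h, a in zip(self.hand_value, self.asset_value))
            norms = math.hypot(*self.hand_value) * math.hypot(*self.asset_value)
            return 1.0 - abs(dot / norms)
        raise ValueError(f"unknown constraint kind: {self.kind}")

    def satisfied(self) -> bool:
        return self.residual() <= self.tol


@dataclass(frozen=True)
class Step:
    """One link of the chain: a hand, an atomic operation, and its constraints."""
    hand: str       # "left" or "right"
    operation: str  # hypothetical atomic operation name
    constraints: Tuple[Constraint, ...]


def first_unsatisfied(chain: List[Step]) -> Optional[int]:
    """Return the index of the first step whose constraints do not all hold."""
    for i, step in enumerate(chain):
        if not all(c.satisfied() for c in step.constraints):
            return i
    return None


# Toy chain: the right hand is already in place, the left hand is 0.2 m away.
chain = [
    Step("right", "pinch", (
        Constraint("point_coincide", (0.30, 0.10, 0.05), (0.30, 0.10, 0.05)),
        Constraint("axis_parallel", (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)),
    )),
    Step("left", "grasp", (
        Constraint("point_coincide", (0.10, -0.20, 0.05), (0.30, -0.20, 0.05)),
    )),
]
```

In this toy chain, `first_unsatisfied(chain)` returns `1`, the index of the left-hand step. In a full pipeline the constraints would more plausibly be handed to a motion planner as targets to solve for rather than merely checked after the fact; the checker above only illustrates the data structure.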

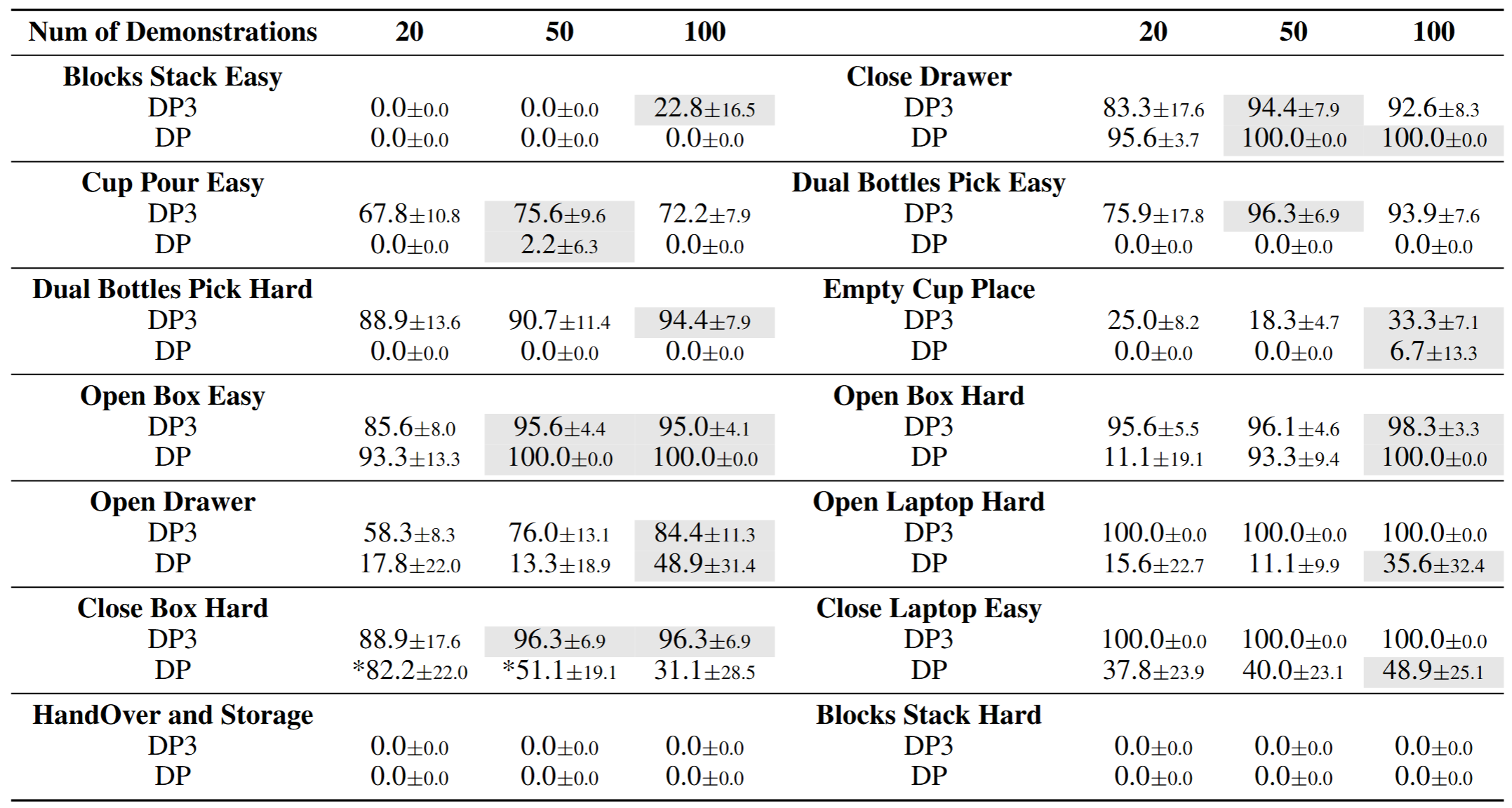

We designed 20 diverse tabletop manipulation tasks and used HumanoidGen to generate demonstrations for them. These tasks cover a wide range of dexterous manipulation scenarios, including single-arm and bimanual operations, long-horizon tasks, articulated object manipulation, and complex collision scenarios.

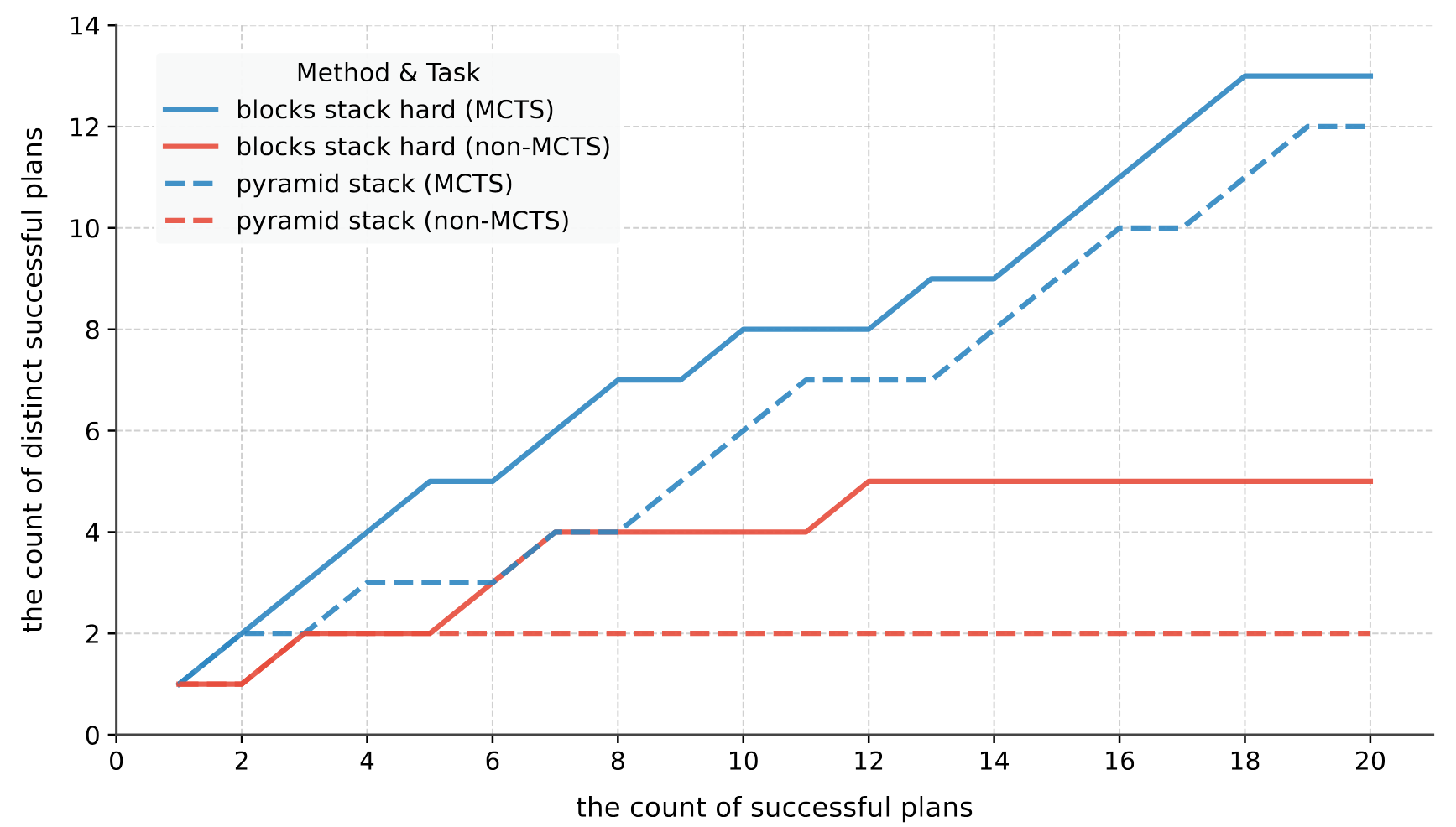

We enhanced HumanoidGen with MCTS to improve its demonstration generation capabilities. For this experiment, we selected three of the tasks above together with one additional single-arm task. We increased the task complexity by (i) removing all operation annotations for cubes and (ii) simplifying the task descriptions to provide minimal guidance.
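For readers unfamiliar with MCTS, the sketch below shows a plain UCT-style search over sequences of atomic operations. It is a generic textbook version, not the introspective variant used in HumanoidGen: the LLM that would propose and evaluate candidate steps is replaced by a fixed action set and a toy reward, and the names `ACTIONS`, `GOAL`, `Node`, and `mcts` are hypothetical.

```python
import math
import random

ACTIONS = ("grasp", "move", "release")  # hypothetical atomic operations
GOAL = ("grasp", "move", "release")     # toy stand-in for "the task succeeded"


def reward(plan):
    """1.0 if the finished plan solves the toy task, else 0.0."""
    return 1.0 if tuple(plan) == GOAL else 0.0


class Node:
    """A search-tree node holding the partial plan that leads to it."""

    def __init__(self, plan, parent=None):
        self.plan = plan
        self.parent = parent
        self.children = {}  # action -> Node
        self.visits = 0
        self.value = 0.0    # sum of rewards backed up through this node

    def is_terminal(self):
        return len(self.plan) == len(GOAL)

    def untried(self):
        return [a for a in ACTIONS if a not in self.children]


def uct_child(node, c=1.4):
    """Pick the child maximizing mean reward plus an exploration bonus."""
    return max(
        node.children.values(),
        key=lambda ch: ch.value / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def mcts(iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(plan=())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while the node is fully expanded.
        while not node.is_terminal() and not node.untried():
            node = uct_child(node)
        # 2. Expansion: add one untried action as a new child.
        if not node.is_terminal():
            action = rng.choice(node.untried())
            child = Node(plan=node.plan + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Rollout: complete the plan with random actions and score it.
        plan = list(node.plan)
        while len(plan) < len(GOAL):
            plan.append(rng.choice(ACTIONS))
        r = reward(plan)
        # 4. Backpropagation: update statistics up to the root.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    # Read out the plan by following the most-visited child at each level.
    node, best = root, []
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        best.append(action)
    return tuple(best)
```

Calling `mcts()` searches the 27 possible three-step plans and returns the most-visited one. In a setting like the one described above, the expansion step would query the LLM for candidate next constraints and the reward would come from executing the plan in simulation, which is where the removed annotations and minimal task descriptions make search useful.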

@article{jing2025humanoidgen,
  title={HumanoidGen: Data Generation for Bimanual Dexterous Manipulation via LLM Reasoning},
  author={Jing, Zhi and Yang, Siyuan and Ao, Jicong and Xiao, Ting and Jiang, Yugang and Bai, Chenjia},
  journal={arXiv preprint arXiv:2507.00833},
  year={2025}
}